On-device AI is getting useful—and it changes speed, cost, and privacy

Phones and laptops can now run compact AI models locally. That shift reduces latency and sometimes cost, while keeping more data on your device. Just as importantly, it lets apps personalize features without constant network calls, which helps anywhere connectivity is spotty or expensive.

The main benefit is responsiveness. Tasks like transcription, translation, or image editing feel instant when requests don’t leave the phone. Dictate a paragraph, refine it, and insert it into an email without a spinning loader. Snap a photo and get on-device categorization, alt text, or background removal before you even open a gallery app. When actions are measured in tens of milliseconds, you try more ideas—and stick with the tool longer.

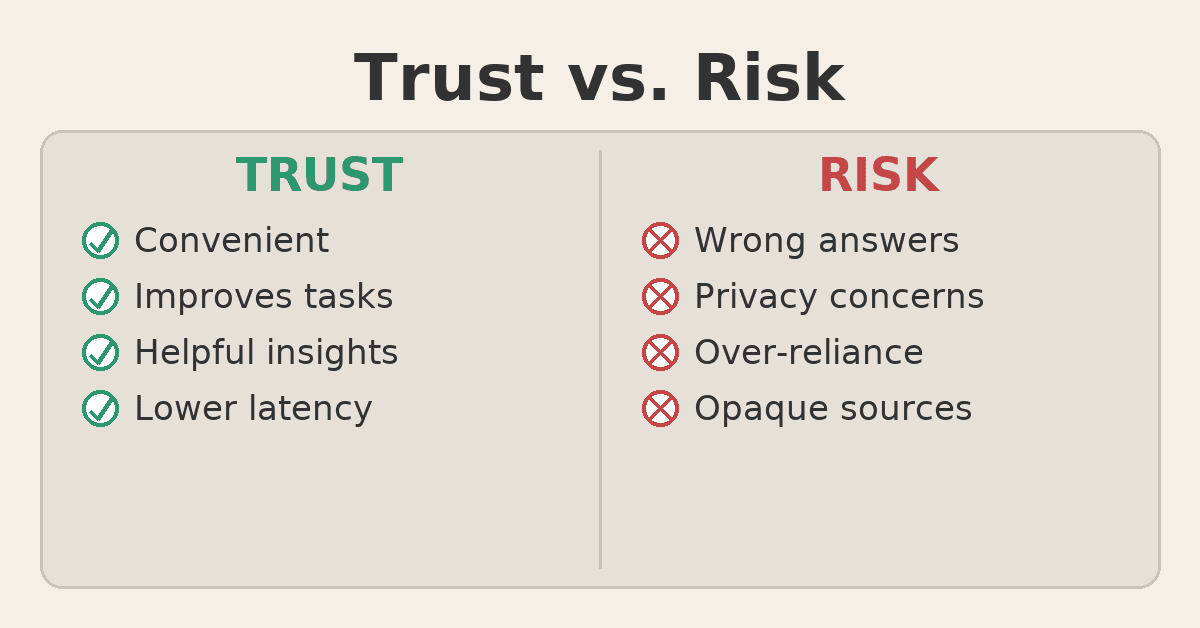

Privacy is another win. On-device features can analyze photos, voice notes, or messages without sending raw content to the cloud. That reduces exposure to breaches, lowers regulatory friction for companies, and builds user trust. For sensitive contexts—health notes, financial reminders, kids’ photos—keeping computation local can be the difference between adoption and refusal.

There are trade-offs. Smaller models can miss nuance, and long tasks still benefit from powerful servers. You might notice this in complex document summaries, multi-step reasoning, or high-resolution video edits, where local models can be slower or less accurate. Developers also wrestle with device fragmentation—different chips, memory limits, and thermal envelopes—which complicates testing and support.

Hybrid setups are emerging. Devices handle quick intents; the cloud kicks in for heavier jobs or when you opt in to richer results. Think of it like progressive enhancement: detect intent locally (“translate this,” “clean the audio”), then escalate to a larger model for advanced refinements or cross-document search. Clear controls and visible handoffs help users understand what stays local and what leaves the device.
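To make the hybrid pattern concrete, here is a minimal routing sketch in Python. Everything in it is hypothetical: the `Request` fields, the `LOCAL_INTENTS` set, and the 2,048-token `LOCAL_TOKEN_BUDGET` are illustrative assumptions, not any platform's API. It keeps quick, recognized intents on the device and escalates heavier jobs to the cloud only when the user has opted in.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    LOCAL = "local"
    CLOUD = "cloud"


# Hypothetical set of lightweight intents assumed to fit a small on-device model.
LOCAL_INTENTS = {"translate", "transcribe", "clean_audio", "autocomplete"}

# Hypothetical budget: inputs larger than this are treated as heavy jobs.
LOCAL_TOKEN_BUDGET = 2048


@dataclass
class Request:
    intent: str                # intent label detected locally, e.g. "translate"
    input_tokens: int          # rough size of the input
    user_opted_in_cloud: bool  # explicit user consent to send data off-device


def choose_route(req: Request) -> Route:
    """Keep quick, known intents local; escalate heavy work only with consent."""
    if req.intent in LOCAL_INTENTS and req.input_tokens <= LOCAL_TOKEN_BUDGET:
        return Route.LOCAL
    # Heavier or unrecognized work goes to the cloud only if the user opted in;
    # otherwise stay on the local model rather than silently uploading data.
    return Route.CLOUD if req.user_opted_in_cloud else Route.LOCAL


if __name__ == "__main__":
    print(choose_route(Request("translate", 120, user_opted_in_cloud=False)))
    print(choose_route(Request("summarize_documents", 50_000, user_opted_in_cloud=True)))
```

A UI could surface the returned `Route` directly, for example by showing a "sent to cloud" indicator whenever `choose_route` returns `Route.CLOUD`, which is one way to make the handoff visible. Falling back to the local model when consent is missing trades result quality for privacy; an app could instead prompt the user before escalating.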

Battery life matters too. Efficient scheduling, such as processing when the device is plugged in or idle, helps keep all-day endurance intact. Modern runtimes throttle workloads, use low-precision math, and tap NPUs/GPUs only when they offer a net win. Apps can precompute embeddings while charging, then run real-time classification with minimal drain during the day.
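The charging-or-idle policy can be sketched as a small scheduler that runs urgent work immediately and queues background work until conditions allow it. This is a simplified illustration, not a real OS API: `DeviceState`, `Scheduler`, and the 50% battery threshold for idle-on-battery work are hypothetical. On real devices the equivalent constraints live in the platform scheduler, such as Android WorkManager's `setRequiresCharging` / `setRequiresDeviceIdle` or iOS `BGProcessingTaskRequest.requiresExternalPower`.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque


@dataclass
class DeviceState:
    charging: bool
    idle: bool        # e.g. screen off, no foreground interaction
    battery_pct: int  # 0-100


@dataclass
class Scheduler:
    """Sketch: run urgent jobs now, defer background jobs until charging or idle."""
    deferred: Deque[Callable[[], None]] = field(default_factory=deque)
    min_battery_for_idle: int = 50  # hypothetical threshold for idle-on-battery work

    def can_run_background(self, state: DeviceState) -> bool:
        # Plugged in: always allowed. Idle on battery: only above the threshold.
        return state.charging or (
            state.idle and state.battery_pct >= self.min_battery_for_idle
        )

    def submit(self, job: Callable[[], None], state: DeviceState, urgent: bool = False) -> bool:
        """Run the job now if urgent or conditions allow; otherwise queue it.
        Returns True if the job ran immediately."""
        if urgent or self.can_run_background(state):
            job()
            return True
        self.deferred.append(job)
        return False

    def on_state_change(self, state: DeviceState) -> int:
        """Drain queued jobs in FIFO order when conditions allow; returns how many ran."""
        if not self.can_run_background(state):
            return 0
        ran = 0
        while self.deferred:
            self.deferred.popleft()()
            ran += 1
        return ran
```

In the scenario above, a hypothetical "precompute embeddings" job would be submitted as background work and wait for the charger, while a user-facing classification call would be submitted with `urgent=True` and run at once.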

As hardware accelerators spread, more everyday apps will quietly add local AI. Camera pipelines, note-taking, accessibility tools, and email clients will start by accelerating tiny features—smarter autocomplete, offline translation, contextual reminders—then expand as model efficiency improves. Users may notice the benefits long before they notice the label: faster taps, fewer spinners, richer results in airplane mode. The endgame isn’t “on-device vs. cloud,” but a seamless mesh where your device does what it’s best at, the network fills in the rest, and you stay in control of speed, privacy, and cost.
